| | |

```

In this scenario, Task 3 in the "Code Review" column requires human intervention. This is similar to how HITL works in AI workflows, where certain tasks need human input or validation before proceeding.

### How HITL Works in KaibanJS

1. **Task Creation**: Tasks are created and can be flagged to require human validation.

2. **AI Processing**: AI agents work on tasks, moving them through different states (like "To Do" to "In Progress").

3. **Human Intervention Points**:

- Tasks enter 'AWAITING_VALIDATION' state when they need human review.

- Humans can provide feedback on tasks in 'BLOCKED', 'AWAITING_VALIDATION', or even 'DONE' states.

4. **Feedback or Validation**:

- Humans can validate (approve) a task or provide feedback for revisions.

- Feedback can be given to guide the AI's next steps or to request changes.

5. **Feedback Processing**:

- If feedback is provided, the task moves to 'REVISE' state.

- The AI agent then addresses the feedback, potentially moving the task back to 'DOING'.

6. **Completion**: Once validated, tasks move to the 'DONE' state.

This process ensures that human expertise is incorporated at crucial points, improving the overall quality and reliability of the AI-driven workflow.

### This HITL workflow can be easily implemented using KaibanJS.

The library provides methods and status to manage the entire process programmatically:

- Methods: `validateTask()`, `provideFeedback()`, `getTasksByStatus()`

- Task Statuses: TODO, DOING, BLOCKED, REVISE, AWAITING_VALIDATION, VALIDATED, DONE

- Workflow Status: INITIAL, RUNNING, BLOCKED, FINISHED

- Feedback History: Each task contains a `feedbackHistory` array, with entries consisting of:

- `content`: The human input or decision

- `status`: PENDING or PROCESSED

- `timestamp`: When the feedback was created or last updated

## Example Usage

Here's an example of how to set up and manage a HITL workflow using KaibanJS:

```js

// Creating a task that requires validation

const task = new Task({

title: "Analyze market trends",

description: "Research and summarize current market trends for our product",

assignedTo: "Alice",

externalValidationRequired: true

});

// Set up the workflow status change listener before starting

team.onWorkflowStatusChange((status) => {

if (status === 'BLOCKED') {

// Check for tasks awaiting validation

const tasksAwaitingValidation = team.getTasksByStatus('AWAITING_VALIDATION');

tasksAwaitingValidation.forEach(task => {

console.log(`Task awaiting validation: ${task.title}`);

// Example: Automatically validate the task

team.validateTask(task.id);

// Alternatively, provide feedback if the task needs revision

// This could be based on human review or automated criteria

// team.provideFeedback(task.id, "Please include more data on competitor pricing.");

});

} else if (status === 'FINISHED') {

console.log('Workflow completed successfully!');

console.log('Final result:', team.getWorkflowResult());

}

});

// Start the workflow and handle the promise

team.start()

.then((output) => {

if (output.status === 'FINISHED') {

console.log('Workflow completed with result:', output.result);

}

})

.catch(error => {

console.error('Workflow encountered an error:', error);

});

```

## Task Statuses

The system includes the following task statuses, which apply to all tasks throughout the entire workflow, including but not limited to HITL processes:

- `TODO`: Task is queued for initiation, awaiting processing.

- `DOING`: Task is actively being worked on by an agent.

- `BLOCKED`: Progress on the task is halted due to dependencies or obstacles.

- `REVISE`: Task requires additional review or adjustments based on feedback.

- `AWAITING_VALIDATION`: Task is completed but requires validation or approval.

- `VALIDATED`: Task has been validated and confirmed as correctly completed.

- `DONE`: Task is fully completed and requires no further action.

These statuses are defined in the [TASK_STATUS_enum](https://github.com/kaiban-ai/KaibanJS/blob/main/src/utils/enums.js#L58) and can be accessed throughout the system for consistency.

## Task State Flow Diagram

Below is a text-based representation of the task state flow diagram:

```

+---------+

| TODO |

+---------+

|

v

+---------+

+--->| DOING |

| +---------+

| |

| +----+----+----+----+

| | | |

v v v v

+-------------+ +---------+ +------------------+

| BLOCKED | | REVISE | | AWAITING_ |

+-------------+ +---------+ | VALIDATION |

| | +------------------+

| | |

| | v

| | +--------------+

| | | VALIDATED |

| | +--------------+

| | |

| | v

+--------------+----------------+

|

v

+---------+

| DONE |

+---------+

```

## Task State Transitions

1. **TODO to DOING**:

- When a task is picked up by an agent to work on.

2. **DOING to BLOCKED**:

- If the task encounters an obstacle or dependency that prevents progress.

3. **DOING to AWAITING_VALIDATION**:

- Only for tasks created with `externalValidationRequired = true`.

- Occurs when the agent completes the task but it needs human validation.

4. **DOING to DONE**:

- For tasks that don't require external validation.

- When the agent successfully completes the task.

5. **AWAITING_VALIDATION to VALIDATED**:

- When a human approves the task without changes.

6. **AWAITING_VALIDATION to REVISE**:

- If the human provides feedback or requests changes during validation.

7. **VALIDATED to DONE**:

- Automatic transition after successful validation.

8. **REVISE to DOING**:

- When the agent starts working on the task again after receiving feedback.

9. **BLOCKED to DOING**:

- When the obstacle is removed and the task can proceed.

## HITL Workflow Integration

1. **Requiring External Validation**:

- Set `externalValidationRequired: true` when creating a task to ensure it goes through human validation before completion.

2. **Initiating HITL**:

- Use `team.provideFeedback(taskId, feedbackContent)` at any point to move a task to REVISE state.

3. **Validating Tasks**:

- Use `team.validateTask(taskId)` to approve a task, moving it from AWAITING_VALIDATION to VALIDATED, then to DONE.

- Use `team.provideFeedback(taskId, feedbackContent)` to request revisions, moving the task from AWAITING_VALIDATION to REVISE.

4. **Processing Feedback**:

- The system automatically processes feedback given through the `provideFeedback()` method.

- Agents handle pending feedback before continuing with the task.

## Feedback in HITL

In KaibanJS, human interventions are implemented through a feedback system. Each task maintains a `feedbackHistory` array to track these interactions.

### Feedback Structure

Each feedback entry in the `feedbackHistory` consists of:

- `content`: The human input or decision

- `status`: The current state of the feedback

- `timestamp`: When the feedback was created or last updated

### Feedback Statuses

KaibanJS uses two primary statuses for feedback:

- `PENDING`: Newly added feedback that hasn't been addressed yet

- `PROCESSED`: Feedback that has been successfully addressed and incorporated

This structure allows for clear tracking of human interventions and their resolution throughout the task lifecycle.

## React Example

```jsx

import React, { useState, useEffect } from 'react';

import { team } from './teamSetup'; // Assume this is where your team is initialized

function WorkflowBoard() {

const [tasks, setTasks] = useState([]);

const [workflowStatus, setWorkflowStatus] = useState('');

const store = team.useStore();

useEffect(() => {

const unsubscribeTasks = store.subscribe(

state => state.tasks,

(tasks) => setTasks(tasks)

);

const unsubscribeStatus = store.subscribe(

state => state.teamWorkflowStatus,

(status) => setWorkflowStatus(status)

);

return () => {

unsubscribeTasks();

unsubscribeStatus();

};

}, []);

const renderTaskColumn = (status, title) => (

{title}

{tasks.filter(task => task.status === status).map(task => (

{task.title}

{task.description}

{status === 'AWAITING_VALIDATION' && (

store.getState().validateTask(task.id)}>

Validate

)}

store.getState().provideFeedback(task.id, "Sample feedback")}>

Provide Feedback

))}

);

return (

Task Board - Workflow Status: {workflowStatus}

{renderTaskColumn('TODO', 'To Do')}

{renderTaskColumn('DOING', 'In Progress')}

{renderTaskColumn('BLOCKED', 'Blocked')}

{renderTaskColumn('AWAITING_VALIDATION', 'Waiting for Feedback')}

{renderTaskColumn('DONE', 'Done')}

store.getState().startWorkflow()}>Start Workflow

);

}

export default WorkflowBoard;

```

## Conclusion

By implementing Human in the Loop through KaibanJS's feedback and validation system, you can create a more robust, ethical, and accurate task processing workflow. This feature ensures that critical decisions benefit from human judgment while maintaining the efficiency of automated processes for routine operations.

:::tip[We Love Feedback!]

Is there something unclear or quirky in the docs? Maybe you have a suggestion or spotted an issue? Help us refine and enhance our documentation by [submitting an issue on GitHub](https://github.com/kaiban-ai/KaibanJS/issues). We’re all ears!

:::

### ./src/get-started/01-Quick Start.md

//--------------------------------------------

// File: ./src/get-started/01-Quick Start.md

//--------------------------------------------

---

title: Quick Start

description: Get started with KaibanJS in under 1 minute. Learn how to set up your Kaiban Board, create AI agents, watch them complete tasks in real-time, and deploy your board online.

---

# Quick Start Guide

Get your AI-powered workflow up and running in minutes with KaibanJS!

:::tip[Try it Out in the Playground!]

Before diving into the installation and coding, why not experiment directly with our interactive playground? [Try it now!](https://www.kaibanjs.com/share/f3Ek9X5dEWnvA3UVgKUQ)

:::

## Quick Demo

Watch this 1-minute video to see KaibanJS in action:

VIDEO

## Prerequisites

- Node.js (v14 or later) and npm (v6 or later)

- An API key for OpenAI or Anthropic, Google AI, or another [supported AI service](../llms-docs/01-Overview.md).

:::tip[Using AI Development Tools?]

Our documentation is available in an LLM-friendly format at [docs.kaibanjs.com/llms-full.txt](https://docs.kaibanjs.com/llms-full.txt). Feed this URL directly into your AI IDE or coding assistant for enhanced development support!

:::

## Setup

**1. Run the KaibanJS initializer in your project directory:**

```bash

npx kaibanjs@latest init

```

This command sets up KaibanJS in your project and opens the Kaiban Board in your browser.

**2. Set up your API key:**

- Create or edit the `.env` file in your project root

- Add your API key (example for OpenAI):

```

VITE_OPENAI_API_KEY=your-api-key-here

```

**3. Restart your Kaiban Board:**

```bash

npm run kaiban

```

## Using Your Kaiban Board

1. In the Kaiban Board, you'll see a default example workflow.

2. Click "Start Workflow" to run the example and see KaibanJS in action.

3. Observe how agents complete tasks in real-time on the Task Board.

4. Check the Results Overview for the final output.

## Customizing Your Workflow

1. Open `team.kban.js` in your project root.

2. Modify the agents and tasks to fit your needs. For example:

```javascript

import { Agent, Task, Team } from 'kaibanjs';

const researcher = new Agent({

name: 'Researcher',

role: 'Information Gatherer',

goal: 'Find latest info on AI developments'

});

const writer = new Agent({

name: 'Writer',

role: 'Content Creator',

goal: 'Summarize research findings'

});

const researchTask = new Task({

description: 'Research recent breakthroughs in AI',

agent: researcher

});

const writeTask = new Task({

description: 'Write a summary of AI breakthroughs',

agent: writer

});

const team = new Team({

name: 'AI Research Team',

agents: [researcher, writer],

tasks: [researchTask, writeTask]

});

export default team;

```

3. Save your changes and the Kaiban Board will automatically reload to see your custom workflow in action.

## Deploying Your Kaiban Board

To share your Kaiban Board online:

1. Run the deployment command:

```bash

npm run kaiban:deploy

```

2. Follow the prompts to deploy your board to Vercel.

3. Once deployed, you'll receive a URL where your Kaiban Board is accessible online.

This allows you to share your AI-powered workflows with team members or clients, enabling collaborative work on complex tasks.

## Quick Tips

- Use `npm run kaiban` to start your Kaiban Board anytime.

- Press `Ctrl+C` in the terminal to stop the Kaiban Board.

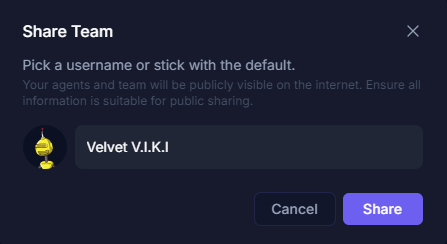

- Click "Share Team" in the Kaiban Board to generate a shareable link.

- Access our LLM-friendly documentation at [docs.kaibanjs.com/llms-full.txt](https://docs.kaibanjs.com/llms-full.txt) to integrate with your AI IDE or LLM tools.

## AI Development Integration

KaibanJS documentation is available in an LLM-friendly format, making it easy to:

- Feed into AI coding assistants for context-aware development

- Use with AI IDEs for better code completion and suggestions

- Integrate with LLM tools for automated documentation processing

Simply point your AI tool to `https://docs.kaibanjs.com/llms-full.txt` to access our complete documentation in a format optimized for large language models.

## Flexible Integration

KaibanJS isn't limited to the Kaiban Board. You can integrate it directly into your projects, create custom UIs, or run agents without a UI. Explore our tutorials for [React](./05-Tutorial:%20React%20+%20AI%20Agents.md) and [Node.js](./06-Tutorial:%20Node.js%20+%20AI%20Agents.md) integration to unleash the full potential of KaibanJS in various development contexts.

## Next Steps

- Experiment with different agent and task combinations.

- Try integrating KaibanJS into your existing projects.

- Check out the full documentation for advanced features.

For more help or to connect with the community, visit [kaibanjs.com](https://www.kaibanjs.com).

:::tip[We Love Feedback!]

Is there something unclear or quirky in the docs? Maybe you have a suggestion or spotted an issue? Help us refine and enhance our documentation by [submitting an issue on GitHub](https://github.com/kaiban-ai/KaibanJS/issues). We're all ears!

:::

### ./src/get-started/02-Core Concepts Overview.md

//--------------------------------------------

// File: ./src/get-started/02-Core Concepts Overview.md

//--------------------------------------------

---

title: Core Concepts Overview

description: A brief introduction to the fundamental concepts of KaibanJS - Agents, Tasks, Teams, and Tools.

---

VIDEO

KaibanJS is built around four primary components: `Agents`, `Tools`, `Tasks`, and `Teams`. Understanding how these elements interact is key to leveraging the full power of the framework.

:::tip[Using AI Development Tools?]

Our documentation is available in an LLM-friendly format at [docs.kaibanjs.com/llms-full.txt](https://docs.kaibanjs.com/llms-full.txt). Feed this URL directly into your AI IDE or coding assistant for enhanced development support!

:::

### Agents

Agents are the autonomous actors in KaibanJS. They can:

- Process information

- Make decisions

- Execute actions

- Interact with other agents

Each agent has:

- A unique role or specialty

- Defined capabilities

- Specific goals or objectives

### Tools

Tools are specific capabilities or functions that agents can use to perform their tasks. They:

- Extend an agent's abilities

- Can include various functionalities like web searches, data processing, or external API interactions

- Allow for customization and specialization of agents

### Tasks

Tasks represent units of work within the system. They:

- Encapsulate specific actions or processes

- Can be assigned to agents

- Have defined inputs and expected outputs

- May be part of larger workflows or sequences

### Teams

Teams are collections of agents working together. They:

- Combine diverse agent capabilities

- Enable complex problem-solving

- Facilitate collaborative workflows

### How Components Work Together

1. **Task Assignment**: Tasks are created and assigned to appropriate agents or teams.

2. **Agent Processing**: Agents analyze tasks, make decisions, and take actions based on their capabilities, tools, and the task requirements.

3. **Tool Utilization**: Agents use their assigned tools to gather information, process data, or perform specific actions required by their tasks.

4. **Collaboration**: In team settings, agents communicate and coordinate to complete more complex tasks, often sharing the results of their tool usage.

5. **Workflow Execution**: Multiple tasks can be chained together to form workflows, allowing for sophisticated, multi-step processes.

6. **Feedback and Iteration**: Results from completed tasks can inform future actions or trigger new tasks, creating dynamic and adaptive systems.

By combining these core concepts, KaibanJS enables the creation of flexible, intelligent systems capable of handling a wide range of applications, from simple automation to complex decision-making processes. The integration of Tools with Agents, Tasks, and Teams allows for highly customizable and powerful AI-driven solutions.

:::tip[We Love Feedback!]

Is there something unclear or quirky in the docs? Maybe you have a suggestion or spotted an issue? Help us refine and enhance our documentation by [submitting an issue on GitHub](https://github.com/kaiban-ai/KaibanJS/issues). We’re all ears!

:::

### ./src/get-started/03-The Kaiban Board.md

//--------------------------------------------

// File: ./src/get-started/03-The Kaiban Board.md

//--------------------------------------------

---

title: The Kaiban Board

description: Transform your AI workflow with Kaiban Board. Easily create, visualize, and manage AI agents locally, then deploy with a single click. Your all-in-one solution for intuitive AI development and deployment.

---

VIDEO

:::tip[Using AI Development Tools?]

Our documentation is available in an LLM-friendly format at [docs.kaibanjs.com/llms-full.txt](https://docs.kaibanjs.com/llms-full.txt). Feed this URL directly into your AI IDE or coding assistant for enhanced development support!

:::

## From Kanban to Kaiban: Evolving Workflow Management for AI

The Kaiban Board draws inspiration from the time-tested [Kanban methodology](https://en.wikipedia.org/wiki/Kanban_(development)), adapting it for the unique challenges of AI agent management.

But what exactly is Kanban, and how does it translate to the world of AI?

### The Kanban Methodology: A Brief Overview

Kanban, Japanese for "visual signal" or "card," originated in Toyota's manufacturing processes in the late 1940s. It's a visual system for managing work as it moves through a process, emphasizing continuous delivery without overburdening the development team.

Key principles of Kanban include:

- Visualizing workflow

- Limiting work in progress

- Managing flow

- Making process policies explicit

- Implementing feedback loops

> If you have worked with a team chances are you have seen a kanban board in action. Popular tools like Trello, ClickUp, and Jira use kanban to help teams manage their work.

## KaibanJS: Kanban for AI Agents

KaibanJS takes the core principles of Kanban and applies them to the complex world of AI agent management. Just as Kanban uses cards to represent work items, KaibanJS uses powerful, state management techniques to represent AI agents, their tasks, and their current states.

With KaibanJS, you can:

- Create, visualize, and manage AI agents, tasks, and teams

- Orchestrate your AI agents' workflows

- Visualize your AI agents' workflows in real-time

- Track the progress of tasks as they move through various stages

- Identify bottlenecks and optimize your AI processes

- Collaborate more effectively with your team on AI projects

By representing agentic processes in a familiar Kanban-style board, KaibanJS makes it easier for both technical and non-technical team members to understand and manage complex AI workflows.

## The Kaiban Board: Your AI Workflow Visualization Center

The Kaiban Board serves as a visual representation of your AI agent workflows powered by the KaibanJS framework. It provides an intuitive interface that allows you to:

1. **Visualize AI agents** created and configured through KaibanJS

2. **Monitor agent tasks and interactions** in real-time

3. **Track progress** across different stages of your AI workflow

4. **Identify issues** quickly for efficient troubleshooting

The KaibanJS framework itself enables you to:

- **Create and configure AI agents** programmatically

- **Deploy your AI solutions** with a simple command

Whether you're a seasoned AI developer or just getting started with multi-agent systems, the combination of the Kaiban Board for visualization and KaibanJS for development offers a powerful yet accessible way to manage your AI projects.

Experience the Kaiban Board for yourself and see how it can streamline your AI development process. Visit our [playground](https://www.kaibanjs.com/playground) to get started today!

:::tip[We Love Feedback!]

Spotted something funky in the docs? Got a brilliant idea? We're all ears! [Submit an issue on GitHub](https://github.com/kaiban-ai/KaibanJS/issues) and help us make KaibanJS even more awesome!

:::

### ./src/get-started/04-Using the Kaiban Board.md

//--------------------------------------------

// File: ./src/get-started/04-Using the Kaiban Board.md

//--------------------------------------------

---

title: Using the Kaiban Board

description: Master the intuitive Kaiban Board interface. Learn to effortlessly create, monitor, and manage AI workflows through our powerful visual tools. Perfect for teams looking to streamline their AI development process.

---

Welcome to the Kaiban Board - your visual command center for AI agent workflows! This guide will walk you through the key features of our intuitive interface, helping you get started quickly.

VIDEO

**Try It Live!**

Experience the Kaiban Board [here](https://www.kaibanjs.com/playground).

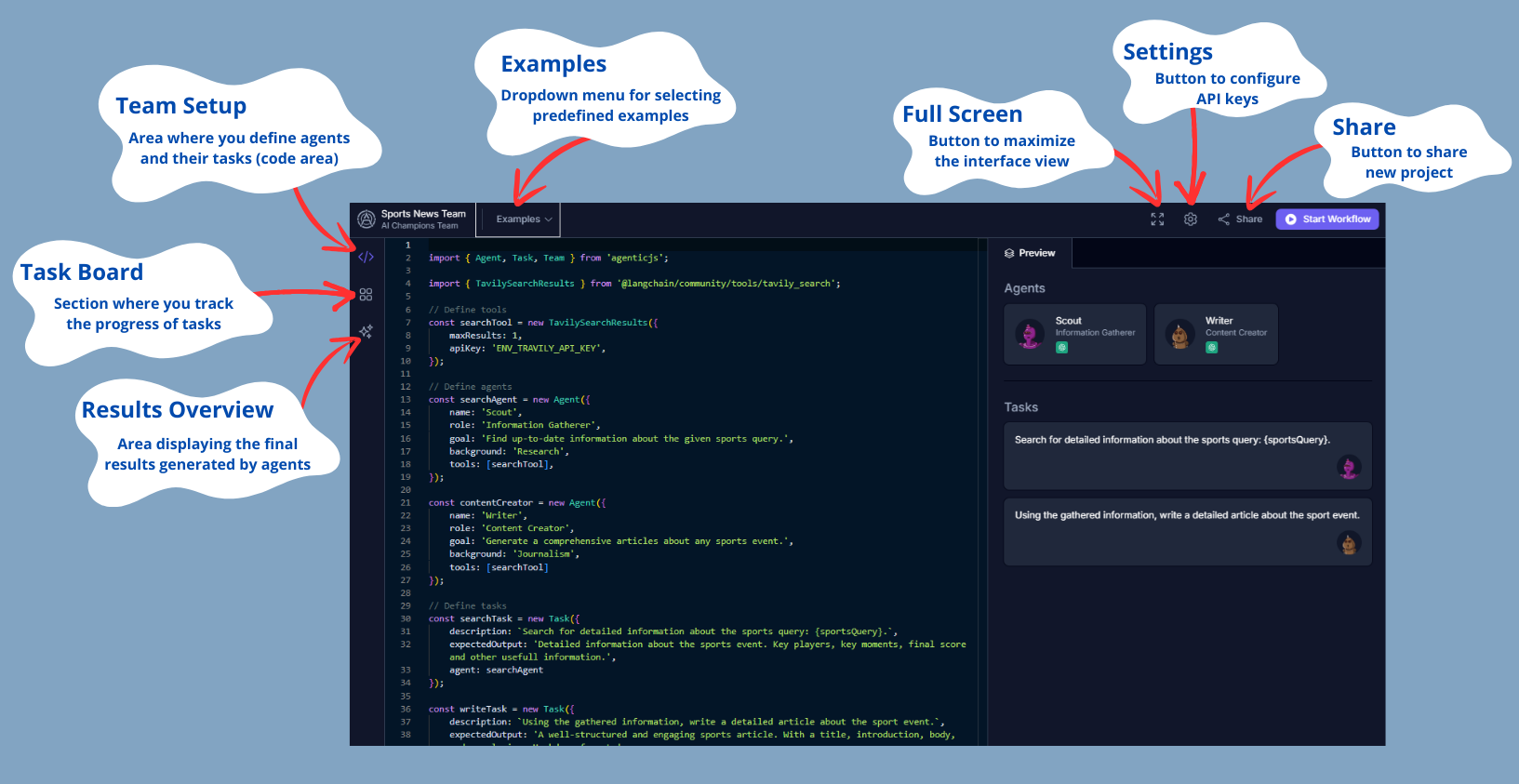

## Interface Overview

The Kaiban Board is divided into 3 main sections:

1. **Team Setup**

2. **Task Board**

3. **Results Overview**

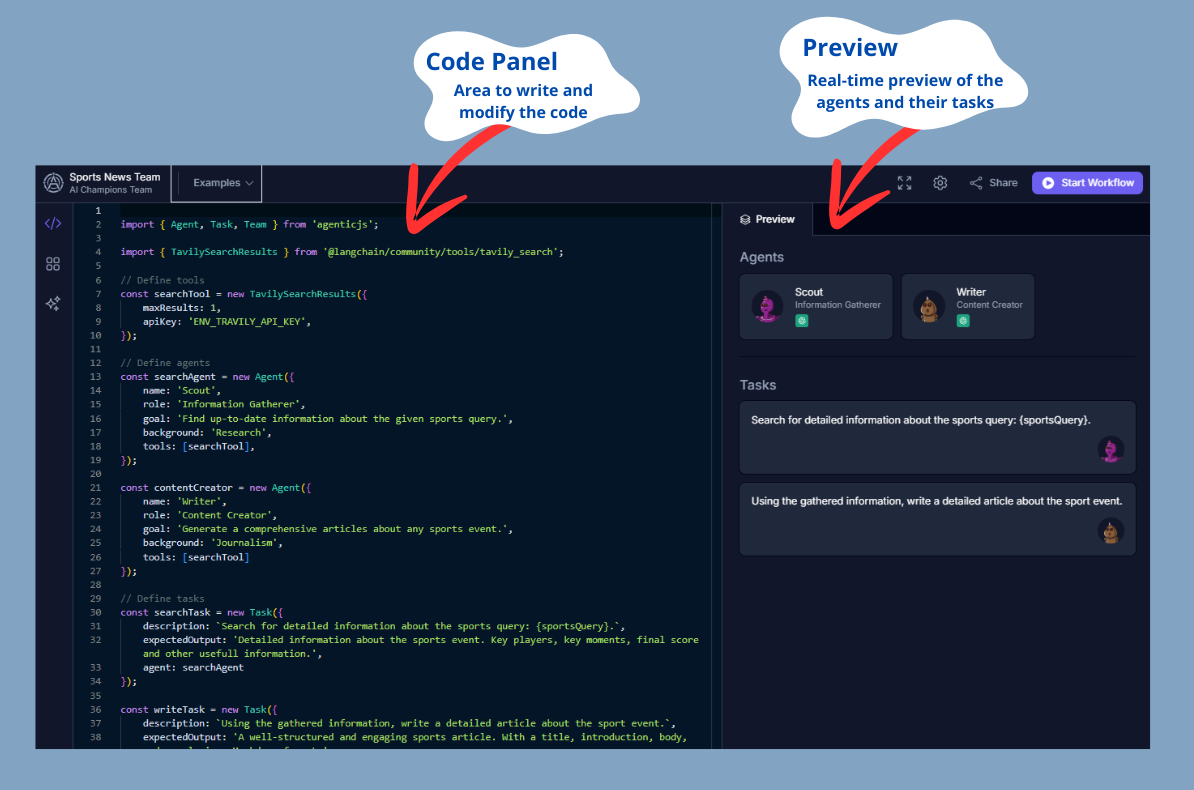

## 1. Team Setup

The "Team Setup" area is where you define the agents and their tasks. Although we won't discuss code in this tutorial, it's important to know that this is where you configure the agents' behavior. By default, there will always be an example selected in this area.

- **Code Panel**: On the left, you can view and edit the code that defines the tools, agents, and tasks.

- **Preview**: On the right, you can see a real-time preview of the configured agents, including their names, roles, and the tasks they will perform.

At the top of the interface, you will find a dropdown menu called **"Examples."** Here you can select from several predefined examples to view and execute. These examples are ready to use and help you understand how multi-agent systems are configured.

## 2. Task Board

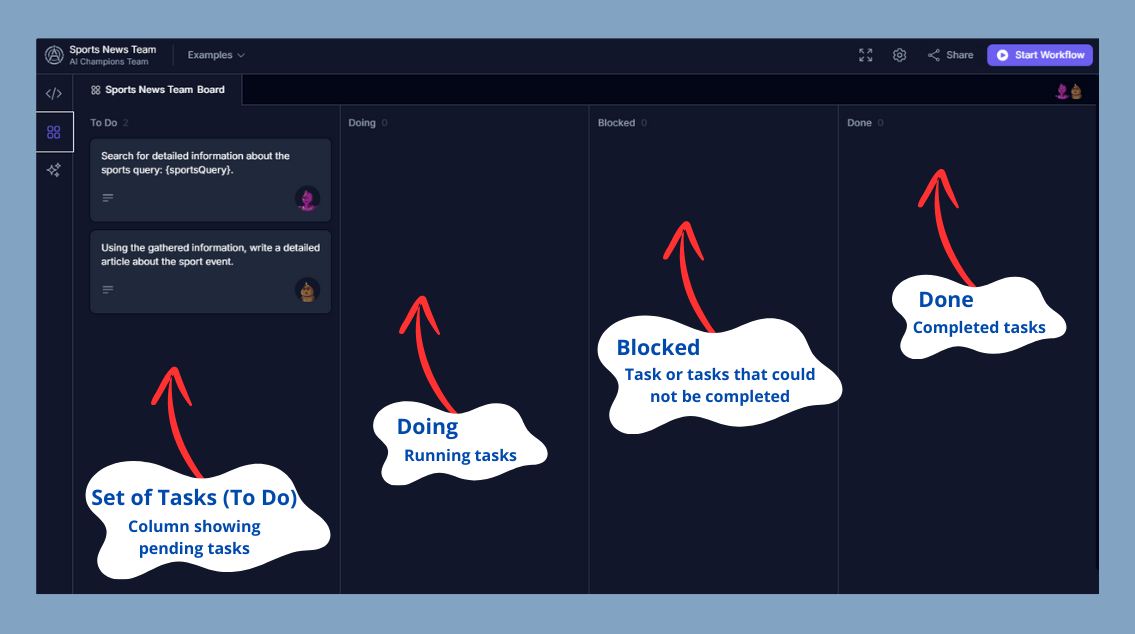

The "Task Board" is a crucial section where you can track the progress of tasks assigned to the agents. It is like a Trello or Jira Kanban board but for AI Agents.

### 2.1. Task Panel

This panel organizes tasks into several columns:

- **To Do**: Pending tasks.

- **Doing**: Tasks in progress.

- **Blocked**: Tasks that cannot proceed due to a blockage.

- **Done**: Completed tasks.

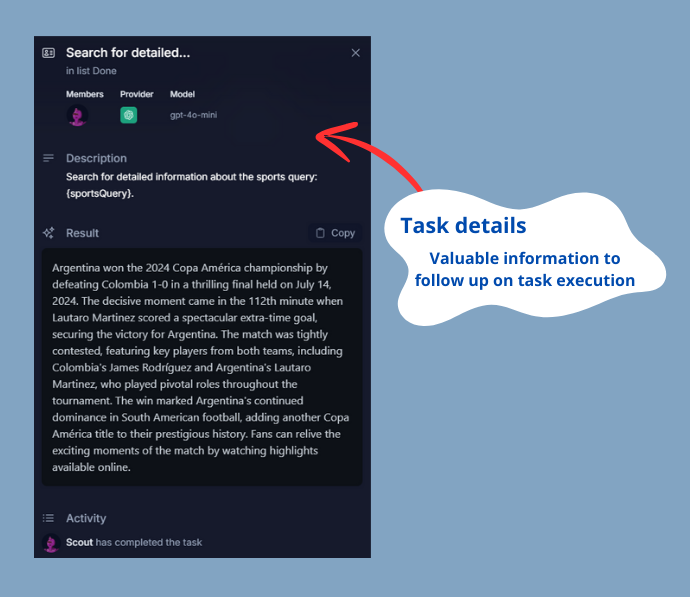

### 2.2. Task Details

For each task, you can see additional details such as its description, the activities carried out, the progress of execution, and partial results. The detailed view includes:

- **Members**: Shows the agents assigned to the task along with their roles, helping you understand who is responsible for each part of the task.

- **Provider**: Indicates the AI service provider being used, ensuring you know which backend is powering the task.

- **Model**: Displays the specific AI model utilized, giving insight into the type of processing being applied.

- **Description**: Provides a brief but detailed overview of what the task aims to achieve, ensuring clarity of purpose.

- **Result**: Shows the outcome generated by the agent, which can be copied for further use. This is where you see the final output based on the agent's processing.

- **Activity**: Lists all the steps and actions taken by the agent during the task. This log includes statuses and updates, providing a comprehensive view of the task's progress and any issues encountered.

### 2.3. General Activity

You can also see an overview of all agents' activities by clicking the "Activity" button.

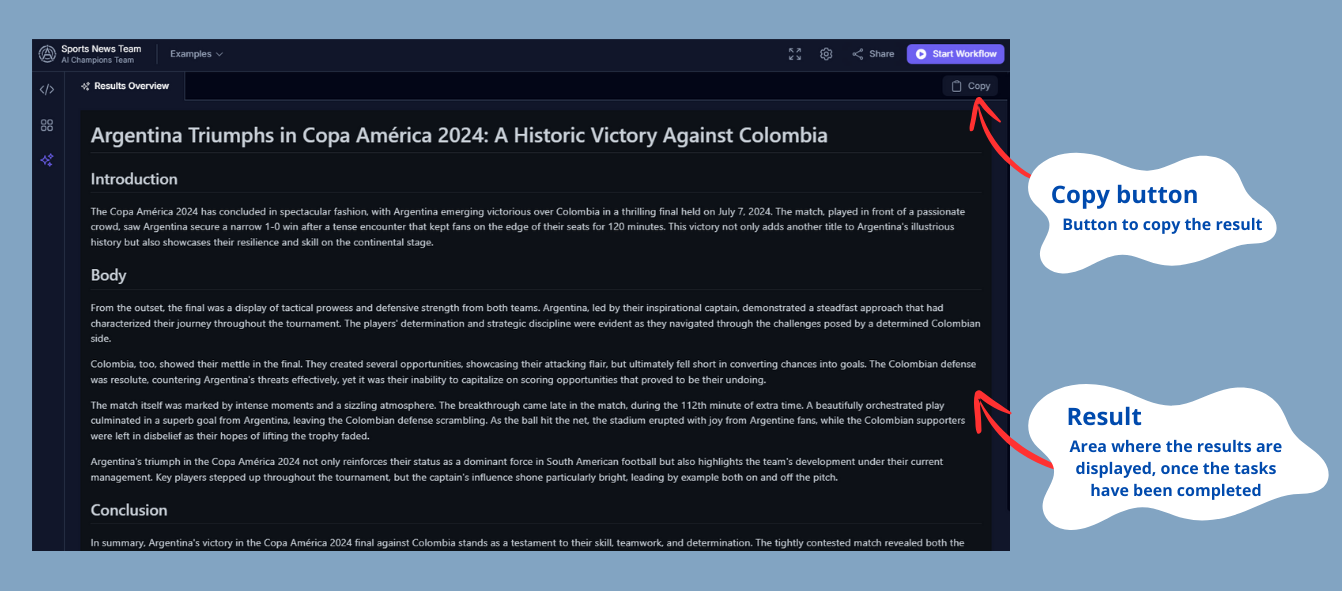

## 3. Results Overview

The **"Results Overview"** area displays the final results generated by the agents once they complete their tasks.

- **Results**: Here you will find the reports generated or any other output produced by the agents. You can copy these results directly from the interface for further use.

## Control Toolbar (Top Right)

Besides the main sections, the interface includes some important additional features:

1. **Share Team**: The "Share" button allows you to generate a link to share your current agent configuration with others. You can name your multi-agent system and easily share it.

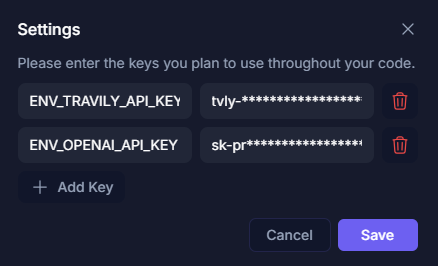

2. **API Keys Configuration**: The settings button allows the user to enter their API Keys to change the AI model used. This provides flexibility to work with different AI service providers according to the project's needs.

3. **Full Screen**: The full-screen button allows you to maximize the interface for a more comprehensive and detailed view of the playground. This is especially useful when working with complex configurations and needing more visual space.

4. **Start Workflow**: This button initiates the execution of the tasks by the agents defined in your code.

## Basic Interface Usage

1. **Create and Configure Agents and Their Tasks**:

- Modify the default code or copy new code to create and configure agents. Observe how it reflects in real-time in the preview.

2. **Start the Workflow**:

- Press "Start Workflow" to begin executing the tasks.

3. **Monitor Progress**:

- Use the Task Board to track the progress of tasks and check specific details if needed.

4. **Review Results**:

- Once tasks are completed, review the results in the "Results Overview" area.

5. **Share and Configure**:

- Use the "Share" and "Settings" buttons to share your project and configure your API Keys.

## Conclusion

The Kaiban Board simplifies AI integration, enabling you to visualize, manage, and share AI agents with ease. Ideal for developers, project managers, and researchers, this tool facilitates efficient operation and collaboration without the need for complex setups. Enhance your projects by leveraging the power of AI with our user-friendly platform.

:::tip[We Love Feedback!]

Is there something unclear or quirky in the docs? Maybe you have a suggestion or spotted an issue? Help us refine and enhance our documentation by [submitting an issue on GitHub](https://github.com/kaiban-ai/KaibanJS/issues). We're all ears!

:::

### ./src/get-started/05-Tutorial: React + AI Agents.md

//--------------------------------------------

// File: ./src/get-started/05-Tutorial: React + AI Agents.md

//--------------------------------------------

---

title: React + AI Agents (Tutorial)

description: A step-by-step guide to creating your first project with KaibanJS, from setup to execution using Vite and React.

---

Welcome to our tutorial on integrating KaibanJS with React using Vite to create a dynamic blogging application. This guide will take you through setting up your environment, defining AI agents, and building a simple yet powerful React application that utilizes AI to research and generate blog posts about the latest news on any topic you choose.

By the end of this tutorial, you will have a solid understanding of how to leverage AI within a React application, making your projects smarter and more interactive.

:::tip[For the Lazy: Jump Right In!]

If you're eager to see the final product in action without following the step-by-step guide first, we've got you covered. Click the link below to access a live version of the project running on CodeSandbox.

[View the completed project on a CodeSandbox](https://stackblitz.com/~/github.com/kaiban-ai/kaibanjs-react-demo?file=src/App.jsx)

Feel free to return to this tutorial to understand how we built each part of the application step by step!

:::

## Project Setup

:::tip[Using AI Development Tools?]

Our documentation is available in an LLM-friendly format at [docs.kaibanjs.com/llms-full.txt](https://docs.kaibanjs.com/llms-full.txt). Feed this URL directly into your AI IDE or coding assistant for enhanced development support!

:::

#### 1. Create a new Vite React project:

```bash

# Create a new Vite project with React template

npm create vite@latest kaibanjs-react-demo -- --template react

cd kaibanjs-react-demo

npm install

# Start the development server

npm run dev

```

#### 2. Install necessary dependencies:

```bash

npm install kaibanjs

# For tool using

npm install @langchain/community --legacy-peer-deps

```

**Note:** You may need to install the `@langchain/community` package with the `--legacy-peer-deps` flag due to compatibility issues.

#### 3. Create a `.env` file in the root of your project and add your API keys:

```

VITE_TRAVILY_API_KEY=your-tavily-api-key

VITE_OPENAI_API_KEY=your-openai-api-key

```

#### To obtain these API keys you must follow the steps below.

**For the Tavily API key:**

1. Visit https://tavily.com/

2. Sign up for an account or log in if you already have one.

3. Navigate to your dashboard or API section.

4. Generate a new API key or copy your existing one.

**For the OpenAI API key:**

1. Go to https://platform.openai.com/

2. Sign up for an account or log in to your existing one.

3. Navigate to the API keys section in your account settings.

4. Create a new API key or use an existing one.

**Note:** Remember to keep these API keys secure and never share them publicly. The `.env` file should be added to your `.gitignore` file to prevent it from being committed to version control. For production environments, consider more secure solutions such as secret management tools or services that your hosting provider might offer.

## Defining Agents and Tools

Create a new file `src/blogTeam.js`. We'll use this file to set up our agents, tools, tasks, and team.

#### 1. First, let's import the necessary modules and set up our search tool:

```javascript

import { Agent, Task, Team } from 'kaibanjs';

import { TavilySearchResults } from '@langchain/community/tools/tavily_search';

// Define the search tool used by the Research Agent

const searchTool = new TavilySearchResults({

maxResults: 5,

apiKey: import.meta.env.VITE_TRAVILY_API_KEY

});

```

#### 2. Now, let's define our agents:

```javascript

// Define the Research Agent

const researchAgent = new Agent({

name: 'Ava',

role: 'News Researcher',

goal: 'Find and summarize the latest news on a given topic',

background: 'Experienced in data analysis and information gathering',

tools: [searchTool]

});

// Define the Writer Agent

const writerAgent = new Agent({

name: 'Kai',

role: 'Content Creator',

goal: 'Create engaging blog posts based on provided information',

background: 'Skilled in writing and content creation',

tools: []

});

```

## Creating Tasks

In the same `blogTeam.js` file, let's define the tasks for our agents:

```javascript

// Define Tasks

const researchTask = new Task({

title: 'Latest news research',

description: 'Research the latest news on the topic: {topic}',

expectedOutput: 'A summary of the latest news and key points on the given topic',

agent: researchAgent

});

const writingTask = new Task({

title: 'Blog post writing',

description: 'Write a blog post about {topic} based on the provided research',

expectedOutput: 'An engaging blog post summarizing the latest news on the topic in Markdown format',

agent: writerAgent

});

```

## Assembling a Team

Still in `blogTeam.js`, let's create our team of agents:

```javascript

// Create the Team

const blogTeam = new Team({

name: 'AI News Blogging Team',

agents: [researchAgent, writerAgent],

tasks: [researchTask, writingTask],

env: { OPENAI_API_KEY: import.meta.env.VITE_OPENAI_API_KEY }

});

export { blogTeam };

```

## Building the React Component

Now, let's create our main React component. Replace the contents of `src/App.jsx` with the following code:

```jsx

import React, { useState } from 'react';

import './App.css';

import { blogTeam } from './blogTeam';

function App() {

// Setting up State

const [topic, setTopic] = useState('');

const [blogPost, setBlogPost] = useState('');

const [stats, setStats] = useState(null);

// Connecting to the KaibanJS Store

const useTeamStore = blogTeam.useStore();

const {

agents,

tasks,

teamWorkflowStatus

} = useTeamStore(state => ({

agents: state.agents,

tasks: state.tasks,

teamWorkflowStatus: state.teamWorkflowStatus

}));

const generateBlogPost = async () => {

// We'll implement this function in the next step

alert('The generateBlogPost function needs to be implemented.');

};

return (

AI Agents News Blogging Team

Generate

Status {teamWorkflowStatus}

{/* Generated Blog Post */}

{blogPost ? (

blogPost

) : (

ℹ️ No blog post available yet Enter a topic and click 'Generate' to see results here.

)}

{/* We'll add more UI elements in the next steps */}

{/* Agents Here */}

{/* Tasks Here */}

{/* Stats Here */}

);

}

export default App;

```

This basic structure sets up our component with state management and a simple UI. Let's break it down step-by-step:

### Step 1: Setting up State

We use the `useState` hook to manage our component's state:

```jsx

const [topic, setTopic] = useState('');

const [blogPost, setBlogPost] = useState('');

const [stats, setStats] = useState(null);

```

These state variables will hold the user's input topic, the generated blog post, and statistics about the generation process.

### Step 2: Connecting to the KaibanJS Store

We use the `const useTeamStore = blogTeam.useStore();` to access the current state of our AI team:

```jsx

const {

agents,

tasks,

teamWorkflowStatus

} = useTeamStore(state => ({

agents: state.agents,

tasks: state.tasks,

teamWorkflowStatus: state.teamWorkflowStatus

}));

```

This allows us to track the status of our agents, tasks, and overall workflow.

### Step 3: Implementing the Blog Post Generation Function

Now, let's implement the `generateBlogPost` function:

```jsx

const generateBlogPost = async () => {

setBlogPost('');

setStats(null);

try {

const output = await blogTeam.start({ topic });

if (output.status === 'FINISHED') {

setBlogPost(output.result);

const { costDetails, llmUsageStats, duration } = output.stats;

setStats({

duration: duration,

totalTokenCount: llmUsageStats.inputTokens + llmUsageStats.outputTokens,

totalCost: costDetails.totalCost

});

} else if (output.status === 'BLOCKED') {

console.log(`Workflow is blocked, unable to complete`);

}

} catch (error) {

console.error('Error generating blog post:', error);

}

};

```

This function starts the KaibanJS workflow, updates the blog post and stats when finished, and handles any errors.

### Step 4: Implementing UX Best Practices for System Feedback

In this section, we'll implement UX best practices to enhance how the system provides feedback to users. By refining the UI elements that communicate internal processes, activities, and statuses, we ensure that users remain informed and engaged, maintaining a clear understanding of the application's operations as they interact with it.

**First, let's add a section to show the status of our agents:**

```jsx

Agents

{agents && agents.map((agent, index) => (

{agent.name}

{agent.status}

))}

```

This code displays a list of agents, showing each agent's name and current status. It provides visibility into which agents are active and what they're doing.

**Next, let's display the tasks that our agents are working on:**

```jsx

Tasks

{tasks && tasks.map((task, index) => (

{task.title}

{task.status}

))}

```

This code creates a list of tasks, showing the title and current status of each task. It helps users understand what steps are involved in generating the blog post.

**Finally, let's add a section to display statistics about the blog post generation process:**

```jsx

Stats

{stats ? (

Total Tokens:

{stats.totalTokenCount}

Total Cost:

${stats.totalCost.toFixed(4)}

Duration:

{stats.duration} ms

) : (

ℹ️ No stats generated yet.

{blogPost ? (

{blogPost}

) : (

ℹ️ No blog post available yet Enter a topic and click 'Generate' to see results here.

)}

AI Agents News Blogging Team

Generate

Status {teamWorkflowStatus}

{/* Generated Blog Post */}

{blogPost ? (

blogPost

) : (

ℹ️ No blog post available yet Enter a topic and click 'Generate' to see results here.

)}

{/* We'll add more UI elements in the next steps */}

{/* Agents Here */}

{/* Tasks Here */}

{/* Stats Here */}

);

}

```

This basic structure sets up our component with state management and a simple UI. Let's break it down step-by-step:

### Step 1: Setting up State

We use the `useState` hook to manage our component's state:

```js

const [topic, setTopic] = useState('');

const [blogPost, setBlogPost] = useState('');

const [stats, setStats] = useState(null);

```

These state variables will hold the user's input topic, the generated blog post, and statistics about the generation process.

### Step 2: Connecting to the KaibanJS Store

We use the `const useTeamStore = blogTeam.useStore();` to access the current state of our AI team:

```js

const {

agents,

tasks,

teamWorkflowStatus

} = useTeamStore(state => ({

agents: state.agents,

tasks: state.tasks,

teamWorkflowStatus: state.teamWorkflowStatus

}));

```

This allows us to track the status of our agents, tasks, and overall workflow.

### Step 3: Implementing the Blog Post Generation Function

Now, let's implement the `generateBlogPost` function:

```js

const generateBlogPost = async () => {

setBlogPost('');

setStats(null);

try {

const output = await blogTeam.start({ topic });

if (output.status === 'FINISHED') {

setBlogPost(output.result);

const { costDetails, llmUsageStats, duration } = output.stats;

setStats({

duration: duration,

totalTokenCount: llmUsageStats.inputTokens + llmUsageStats.outputTokens,

totalCost: costDetails.totalCost

});

} else if (output.status === 'BLOCKED') {

console.log(`Workflow is blocked, unable to complete`);

}

} catch (error) {

console.error('Error generating blog post:', error);

}

};

```

This function starts the KaibanJS workflow, updates the blog post and stats when finished, and handles any errors.

### Step 4: Implementing UX Best Practices for System Feedback

In this section, we'll implement UX best practices to enhance how the system provides feedback to users. By refining the UI elements that communicate internal processes, activities, and statuses, we ensure that users remain informed and engaged, maintaining a clear understanding of the application's operations as they interact with it.

**First, let's add a section to show the status of our agents:**

```js

Agents

{agents && agents.map((agent, index) => (

{agent.name}

{agent.status}

))}

```

This code displays a list of agents, showing each agent's name and current status. It provides visibility into which agents are active and what they're doing.

**Next, let's display the tasks that our agents are working on:**

```js

Tasks

{tasks && tasks.map((task, index) => (

{task.title}

{task.status}

))}

```

This code creates a list of tasks, showing the title and current status of each task. It helps users understand what steps are involved in generating the blog post.

**Finally, let's add a section to display statistics about the blog post generation process:**

```js

Stats

{stats ? (

Total Tokens:

{stats.totalTokenCount}

Total Cost:

${stats.totalCost.toFixed(4)}

Duration:

{stats.duration} ms

) : (

ℹ️ No stats generated yet.

{blogPost ? (

{blogPost}

) : (

ℹ️ No blog post available yet Enter a topic and click 'Generate' to see results here.

)}

Task Statuses

{tasks.map(task => (

{task.description}: Status - {task.status}

))}

);

};

export default TaskStatusComponent;

```

### Integration Examples

To help you get started quickly, here are examples of KaibanJS integrated with different JavaScript frameworks:

- **NodeJS + KaibanJS:** Enhance your backend services with AI capabilities. [Try it on CodeSandbox](https://codesandbox.io/p/github/darielnoel/KaibanJS-NodeJS/main).

- **React + Vite + KaibanJS:** Build dynamic frontends with real-time AI features. [Explore on CodeSandbox](https://codesandbox.io/p/github/darielnoel/KaibanJS-React-Vite/main).

## Conclusion

Integrating KaibanJS with your preferred JavaScript framework unlocks powerful possibilities for enhancing your applications with AI-driven interactions and functionalities. Whether you're building a simple interactive UI in React or managing complex backend logic in Node.js, KaibanJS provides the tools you need to embed sophisticated AI capabilities into your projects.

:::tip[We Love Feedback!]

Is there something unclear or quirky in the docs? Maybe you have a suggestion or spotted an issue? Help us refine and enhance our documentation by [submitting an issue on GitHub](https://github.com/kaiban-ai/KaibanJS/issues). We’re all ears!

:::

### ./src/how-to/04-Implementing RAG with KaibanJS.md

//--------------------------------------------

// File: ./src/how-to/04-Implementing RAG with KaibanJS.md

//--------------------------------------------

---

title: Implementing a RAG tool

description: Learn to enhance your AI projects with the power of Retrieval Augmented Generation (RAG). This step-by-step tutorial guides you through creating the WebRAGTool in KaibanJS, enabling your AI agents to access and utilize web-sourced, context-specific data with ease.

---

In this hands-on tutorial, we'll build a powerful WebRAGTool that can fetch and process web content dynamically, enhancing your AI agents' ability to provide accurate and contextually relevant responses.

:::tip[Using AI Development Tools?]

Our documentation is available in an LLM-friendly format at [docs.kaibanjs.com/llms-full.txt](https://docs.kaibanjs.com/llms-full.txt). Feed this URL directly into your AI IDE or coding assistant for enhanced development support!

:::

We will be using:

- [KaibanJS](https://kaibanjs.com/): For Agents Orchestration.

- [LangChain](https://js.langchain.com/docs/introduction/): For the WebRAGTool Creation.

- [OpenAI](https://openai.com/): For LLM inference and Embeddings.

- [React](https://reactjs.org/): For the UI.

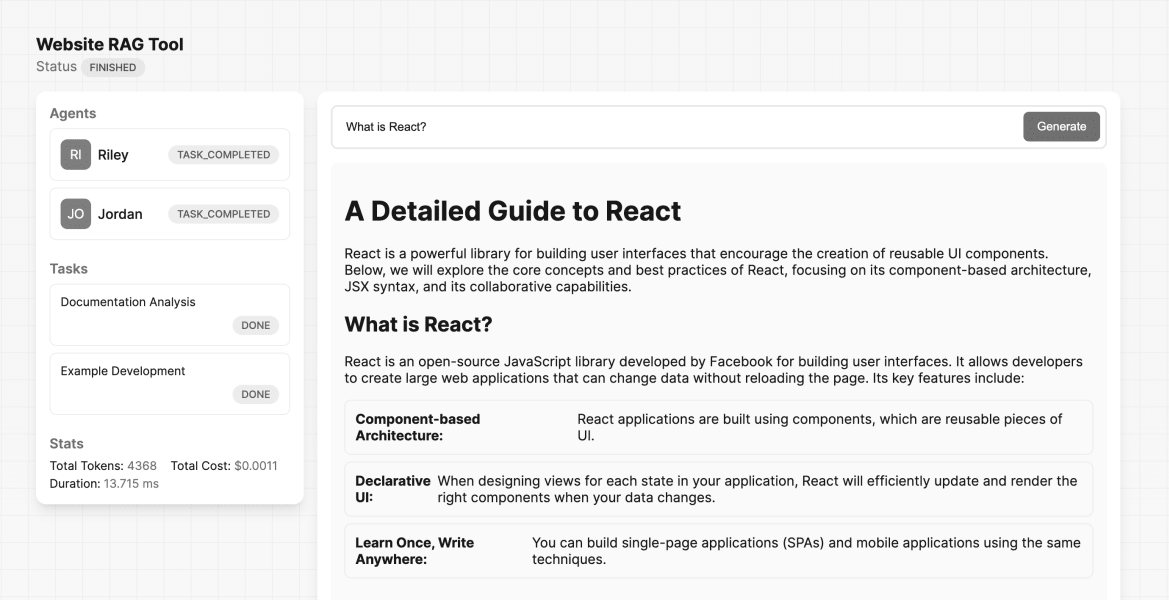

## Final Result

A basic React App that uses the WebRAGTool to answer questions about the React documentation website.

:::tip[For the Lazy: Jump Right In!]

Ready to dive straight into the code? You can find the complete project on CodeSandbox by following the link below:

[View the completed project on a CodeSandbox](https://stackblitz.com/~/github.com/kaiban-ai/kaibanjs-web-rag-tool-demo)

Feel free to explore the project and return to this tutorial for a detailed walkthrough!

:::

## Parts of the Tutorial

:::note[Note]

This tutorial assumes you have a basic understanding of the KaibanJS framework and custom tool creation. If you're new to these topics, we recommend reviewing the following resources before proceeding:

- [Getting Started with KaibanJS](/category/get-started)

- [Creating Custom Tools in KaibanJS](/tools-docs/custom-tools/Create%20a%20Custom%20Tool)

These guides will provide you with the foundational knowledge needed to make the most of this tutorial.

:::

On this tutorial we will:

1. **Explain Key Components of a RAG Tool:**

A RAG (Retrieval Augmented Generation) tool typically consists of several key components that work together to enhance the capabilities of language models. Understanding these components is crucial before we dive into building our WebRAGTool.

2. **Create a WebRAGTool:**

The Tool will fetch content from specified web pages. Processes and indexes this content. Uses the indexed content to answer queries with context-specific information.

3. **Test the WebRAGTool:**

We'll create a simple test to verify that the WebRAGTool works as expected.

4. **Integrate the WebRAGTool into your KaibanJS Environment:**

We'll create AI agents that utilize the WebRAGTool.These agents will be organized into a KaibanJS team, demonstrating multi-agent collaboration.

5. **Create a simple UI:**

We will point you to an existing example project that uses React to create a simple UI for interacting with the WebRAGTool.

Let's Get Started.

## Key Components of a RAG Tool

Before we start building the WebRAGTool, let's understand the key components that make it work:

| Component | Description | Example/Usage in Tutorial |

|-----------|-------------|---------------------------|

| Source | Where information is obtained for processing and storage. Your knowledge base. PDFs, Web PAges, Excell, API, etc. | Web pages (HTML content) |

| Vector Storage | Specialized database for storing and retrieving high-dimensional vectors representing semantic content | In-memory storage |

| Operation Type | How we interact with vector storage and source | Write: Indexing web content - Read: Querying for answers |

| LLM (Large Language Model) | AI model that processes natural language and generates responses | gpt-4o-mini |

| Embedder | Converts text into vector representations capturing semantic meaning | OpenAIEmbeddings |

#### By combining these components, our WebRAGTool will be able to:

- fetch web content,

- convert it into searchable vector form.

- store it efficiently.

- use it to generate informed responses to user queries.

Now that we've covered the key components of our RAG system, let's dive into the implementation steps.

## Create a WebRAGTool

### 1. Setting Up the Environment

First, let's install the necessary dependencies and set up our project:

```bash

npm install kaibanjs @langchain/core @langchain/community @langchain/openai zod cheerio

```

Now, create a new file called `WebRAGTool.js` and add the following imports and class structure:

```javascript

import { Tool } from '@langchain/core/tools';

import 'cheerio';

import { CheerioWebBaseLoader } from '@langchain/community/document_loaders/web/cheerio';

import { RecursiveCharacterTextSplitter } from 'langchain/text_splitter';

import { OpenAIEmbeddings, ChatOpenAI } from '@langchain/openai';

import { createStuffDocumentsChain } from 'langchain/chains/combine_documents';

import { StringOutputParser } from '@langchain/core/output_parsers';

import { MemoryVectorStore } from 'langchain/vectorstores/memory';

import { ChatPromptTemplate } from '@langchain/core/prompts';

import { z } from 'zod';

export class WebRAGTool extends Tool {

constructor(fields) {

super(fields);

this.url = fields.url;

this.name = 'web_rag';

this.description = 'This tool implements Retrieval Augmented Generation (RAG) by dynamically fetching and processing web content from a specified URL to answer user queries.';

this.schema = z.object({

query: z.string().describe('The query for which to retrieve and generate answers.'),

});

}

async _call(input) {

try {

// Create Source Loader Here

// Implement Vector Storage Here

// Configure LLM and Embedder Here

// Build RAG Pipeline Here

// Generate and Return Response Here

} catch (error) {

console.error('Error running the WebRAGTool:', error);

throw error;

}

}

}

```

This boilerplate sets up the basic structure of our `WebRAGTool` class. We've included placeholders for each major component we'll be implementing in the subsequent steps. This approach provides a clear roadmap for what we'll be building and where each piece fits into the overall structure.

### 2. Creating the Source Loader

In this step, we set up the source loader to fetch and process web content. We use CheerioWebBaseLoader to load HTML content from the specified URL, and then split it into manageable chunks using RecursiveCharacterTextSplitter. This splitting helps in processing large documents while maintaining context.

Replace the "Create Source Loader Here" comment with the following code:

```js

// Create Source Loader Here

const loader = new CheerioWebBaseLoader(this.url);

const docs = await loader.load();

const textSplitter = new RecursiveCharacterTextSplitter({

chunkSize: 1000,

chunkOverlap: 200,

});

const splits = await textSplitter.splitDocuments(docs);

```

### 3. Implementing the Vector Storage

Here, we create a vector store to efficiently store and retrieve our document chunks. We use MemoryVectorStore for in-memory storage and OpenAIEmbeddings to convert our text chunks into vector representations. This allows for semantic search and retrieval of relevant information.

Replace the "Implement Vector Storage Here" comment with:

```js

// Implement Vector Storage Here

const vectorStore = await MemoryVectorStore.fromDocuments(

splits,

new OpenAIEmbeddings({

apiKey: import.meta.env.VITE_OPENAI_API_KEY,

})

);

const retriever = vectorStore.asRetriever();

```

### 4. Configuring the LLM and Embedder

In this step, we initialize the language model (LLM) that will generate responses based on the retrieved information. We're using OpenAI's ChatGPT model here. Note that the embedder was already configured in the vector store creation step.

Replace the "Configure LLM and Embedder Here" comment with:

```js

// Configure LLM and Embedder Here

const llm = new ChatOpenAI({

model: 'gpt-4o-mini',

apiKey: import.meta.env.VITE_OPENAI_API_KEY,

});

```

### 5. Building the RAG Pipeline

Now we create the RAG (Retrieval-Augmented Generation) pipeline. This involves setting up a prompt template to structure input for the language model and creating a chain that combines the LLM, prompt, and document processing.

Replace the "Build RAG Pipeline Here" comment with:

```js

// Build RAG Pipeline Here

const prompt = ChatPromptTemplate.fromTemplate(`

You are an assistant for question-answering tasks. Use the following pieces of retrieved context to answer the question. If you don't know the answer, just say that you don't know. Use three sentences maximum and keep the answer concise.

Question: {question}

Context: {context}

Answer:`);

const ragChain = await createStuffDocumentsChain({

llm,

prompt,

outputParser: new StringOutputParser(),

});

```

### 6. Generate and Return Response

Finally, we use our RAG pipeline to generate a response. This involves retrieving relevant documents based on the input query and then using the RAG chain to generate a response that combines the query with the retrieved context.

Replace the "Generate and Return Response Here" comment with:

```js

// Generate and Return Response Here

const retrievedDocs = await retriever.invoke(input.query);

const response = await ragChain.invoke({

question: input.query,

context: retrievedDocs,

});

return response;

```

### Complete WebRAGTool Implementation

After following the detailed step-by-step explanation and building each part of the `WebRAGTool`, here is the complete code for the tool. You can use this to verify your implementation or as a quick start to copy and paste into your project:

```javascript

import { Tool } from '@langchain/core/tools';

import 'cheerio';

import { CheerioWebBaseLoader } from '@langchain/community/document_loaders/web/cheerio';

import { RecursiveCharacterTextSplitter } from 'langchain/text_splitter';

import { OpenAIEmbeddings, ChatOpenAI } from '@langchain/openai';

import { createStuffDocumentsChain } from 'langchain/chains/combine_documents';

import { StringOutputParser } from '@langchain/core/output_parsers';

import { MemoryVectorStore } from 'langchain/vectorstores/memory';

import { ChatPromptTemplate } from '@langchain/core/prompts';

import { z } from 'zod';

export class WebRAGTool extends Tool {

constructor(fields) {

super(fields);

// Store the URL from which to fetch content

this.url = fields.url;

// Define the tool's name and description

this.name = 'web_rag';

this.description =

'This tool implements Retrieval Augmented Generation (RAG) by dynamically fetching and processing web content from a specified URL to answer user queries. It leverages external web sources to provide enriched responses that go beyond static datasets, making it ideal for applications needing up-to-date information or context-specific data. To use this tool effectively, specify the target URL and query parameters, and it will retrieve relevant documents to generate concise, informed responses based on the latest content available online';

// Define the schema for the input query using Zod for validation

this.schema = z.object({

query: z

.string()

.describe('The query for which to retrieve and generate answers.'),

});

}

async _call(input) {

try {

// Step 1: Load Content from the Specified URL

const loader = new CheerioWebBaseLoader(this.url);

const docs = await loader.load();

// Step 2: Split the Loaded Documents into Chunks

const textSplitter = new RecursiveCharacterTextSplitter({

chunkSize: 1000,

chunkOverlap: 200,

});

const splits = await textSplitter.splitDocuments(docs);

// Step 3: Create a Vector Store from the Document Chunks

const vectorStore = await MemoryVectorStore.fromDocuments(

splits,

new OpenAIEmbeddings({

apiKey: import.meta.env.VITE_OPENAI_API_KEY,

})

);

// Step 4: Initialize a Retriever

const retriever = vectorStore.asRetriever();

// Step 5: Define the Prompt Template for the Language Model

const prompt = ChatPromptTemplate.fromTemplate(`

You are an assistant for question-answering tasks. Use the following pieces of retrieved context to answer the question. If you don't know the answer, just say that you don't know. Use three sentences maximum and keep the answer concise.

Question: {question}

Context: {context}

Answer:`);

// Step 6: Initialize the Language Model (LLM)

const llm = new ChatOpenAI({

model: 'gpt-4o-mini',

apiKey: import.meta.env.VITE_OPENAI_API_KEY,

});

// Step 7: Create the RAG Chain

const ragChain = await createStuffDocumentsChain({

llm,

prompt,

outputParser: new StringOutputParser(),

});

// Step 8: Retrieve Relevant Documents Based on the User's Query

const retrievedDocs = await retriever.invoke(input.query);

// Step 9: Generate the Final Response

const response = await ragChain.invoke({

question: input.query,

context: retrievedDocs,

});

// Step 10: Return the Generated Response

return response;

} catch (error) {

// Log and rethrow any errors that occur during the process

console.error('Error running the WebRAGTool:', error);

throw error;

}

}

}

```

This complete code snippet is ready to be integrated into your project. It encompasses all the functionality discussed in the tutorial, from fetching and processing data to generating responses based on the retrieved information.

## Testing the WebRAGTool

Once you have implemented the `WebRAGTool`, testing it is crucial to ensure it functions as intended. Below is a step-by-step guide on how to set up and run a simple test. This test mimics a realistic use-case, similar to how an agent might invoke the tool within the `KaibanJS` framework once it is integrated into an AI team.

First, ensure that you have the tool script in your project. Here’s how you import the `WebRAGTool` that you've developed:

```javascript

import { WebRAGTool } from './WebRAGTool'; // Adjust the path as necessary

```

Next, define a function to test the tool by executing a query through the RAG process:

```javascript

async function testTool() {

// Create an instance of the WebRAGTool with a specific URL

const tool = new WebRAGTool({

url: 'https://react.dev/',

});

// Invoke the tool with a query and log the output

const queryResponse = await tool.invoke({ query: "What is React?" });

console.log(queryResponse);

}

testTool();

```

**Example Console Output:**

```

React is a JavaScript library for building user interfaces using components, allowing developers to create dynamic web applications. It emphasizes the reuse of components to build complex UIs in a structured manner. Additionally, React fosters a large community, enabling collaboration and support among developers and designers.

```

The console output provided is an example of the potential result when using RAG to enhance query responses. It illustrates the tool's capability to provide detailed and context-specific information, which is critical for building more knowledgeable and responsive AI systems. Remember, the actual output may vary depending on updates to the source content and modifications in the processing logic of your tool.

## Integrating the WebRAGTool into Your KaibanJS Environment

Once you have developed the `WebRAGTool`, integrating it into your KaibanJS environment involves a few key steps that link the tool to an agent capable of utilizing its capabilities. This integration ensures that your AI agents can effectively use the RAG functionality to enhance their responses based on the latest web content.

### Step 1: Import the Tool

First, ensure the tool is accessible within your project by importing it where you plan to use it:

```javascript

import { WebRAGTool } from './WebRAGTool'; // Adjust the path as necessary based on your project structure

```

### Step 2: Initialize the Tool

Create an instance of the `WebRAGTool`, specifying the URL of the data source you want the tool to retrieve information from. This URL should point to the web content relevant to your agent's queries:

```javascript

const webRAGTool = new WebRAGTool({

url: 'https://react.dev/' // Specify the URL to fetch content from, tailored to your agent's needs

});

```

### Step 3: Assign the Tool to an Agent

With the tool initialized, the next step is to assign it to an agent. This involves creating an agent and configuring it to use this tool as part of its resources to answer queries. Here, we configure an agent whose role is to analyze and summarize documentation:

```javascript

import { Agent, Task, Team } from 'kaibanjs';

const docAnalystAgent = new Agent({

name: 'Riley',

role: 'Documentation Analyst',

goal: 'Analyze and summarize key sections of React documentation',

background: 'Expert in software development and technical documentation',

tools: [webRAGTool] // Assign the WebRAGTool to this agent

});

```

By following these steps, you seamlessly integrate RAG into your KaibanJS application, enabling your agents to utilize dynamically retrieved web content to answer queries more effectively. This setup not only makes your agents more intelligent and responsive but also ensures that they can handle queries with the most current data available, enhancing user interaction and satisfaction.

### Step 4: Integrate the Team into a Real Application

After setting up your individual agents and their respective tools, the next step is to combine them into a team that can be integrated into a real-world application. This demonstrates how different agents with specialized skills can work together to achieve complex tasks.

Here's how you can define a team of agents using the `KaibanJS` framework and prepare it for integration into an application:

```javascript

import { Agent, Task, Team } from 'kaibanjs';

import { WebRAGTool } from './tool';

const webRAGTool = new WebRAGTool({

url: 'https://react.dev/',

});

// Define the Documentation Analyst Agent

const docAnalystAgent = new Agent({

name: 'Riley',

role: 'Documentation Analyst',

goal: 'Analyze and summarize key sections of React documentation',

background: 'Expert in software development and technical documentation',

tools: [webRAGTool], // Include the WebRAGTool in the agent's tools

});

// Define the Developer Advocate Agent

const devAdvocateAgent = new Agent({

name: 'Jordan',

role: 'Developer Advocate',

goal: 'Provide detailed examples and best practices based on the summarized documentation',

background: 'Skilled in advocating and teaching React technologies',

tools: [],

});

// Define Tasks

const analysisTask = new Task({

title: 'Documentation Analysis',

description: 'Analyze the React documentation sections related to {topic}',

expectedOutput: 'A summary of key features and updates in the React documentation on the given topic',

agent: docAnalystAgent,

});

const exampleTask = new Task({

title: 'Example Development',

description: 'Provide a detailed explanation of the analyzed documentation',

expectedOutput: 'A detailed guide with examples and best practices in Markdown format',

agent: devAdvocateAgent,

});

// Create the Team

const reactDevTeam = new Team({

name: 'AI React Development Team',

agents: [docAnalystAgent, devAdvocateAgent],

tasks: [analysisTask, exampleTask],

env: { OPENAI_API_KEY: import.meta.env.VITE_OPENAI_API_KEY }

});

export { reactDevTeam };

```

**Using the Team in an Application:**

Now that you have configured your team, you can integrate it into an application. This setup allows the team to handle complex queries about React, processing them through the specialized agents to provide comprehensive answers and resources.

For a practical demonstration, revisit the interactive example we discussed earlier in the tutorial:

[View the example project on CodeSandbox](https://stackblitz.com/~/github.com/kaiban-ai/kaibanjs-web-rag-tool-demo)

This link leads to the full project setup where you can see the team in action. You can run queries, observe how the agents perform their tasks, and get a feel for the interplay of different components within a real application.

## Conclusion

By following this tutorial, you've learned how to create a custom RAG tool that fetches and processes web content, enhancing your AI's ability to provide accurate and contextually relevant responses.

This comprehensive guide should give you a thorough understanding of building and integrating a RAG tool in your AI applications. If you have any questions or need further clarification on any step, feel free to ask!

## Acknowledgments

Thanks to [@Valdozzz](https://twitter.com/valdozzz1) for suggesting this valuable addition. Your contributions help drive innovation within our community!

## Feedback

:::tip[We Love Feedback!]

Is there something unclear or quirky in this tutorial? Have a suggestion or spotted an issue? Help us improve by [submitting an issue on GitHub](https://github.com/kaiban-ai/KaibanJS/issues). Your input is valuable!

:::

### ./src/how-to/05-Using Typescript.md

//--------------------------------------------

// File: ./src/how-to/05-Using Typescript.md

//--------------------------------------------

---

title: Using Typescript

description: KaibanJS is type supported. You can use TypeScript to get better type checking and intellisense with powerful IDEs like Visual Studio Code.

---

## Setup

To start using typescript, you need to install the typescript package:

```bash

npm install typescript --save-dev

```

You may optionally create a custom tsconfig file to configure your typescript settings. A base settings file looks like this:

```json

{

"compilerOptions": {

"noEmit": true,

"strict": true,

"module": "NodeNext",

"moduleResolution": "NodeNext",

"esModuleInterop": true,

"skipLibCheck": true

},

"exclude": ["node_modules"]

}

```

:::tip[Using AI Development Tools?]

Our documentation is available in an LLM-friendly format at [docs.kaibanjs.com/llms-full.txt](https://docs.kaibanjs.com/llms-full.txt). Feed this URL directly into your AI IDE or coding assistant for enhanced development support!

:::

Now you can follow the, [Quick Start](/docs/get-started/01-Quick%20Start.md) guide to get started with KaibanJS using TypeScript.

## Types

Base classes are already type suported and you can import them like below:-

```typescript

import { Agent, Task, Team } from "kaibanjs";

```

For any other specific types, can call them like below:-

```typescript

import type { IAgentParams, ITaskParams } from "kaibanjs";

```

## Learn more

This guide has covered the basics of setting up TypeScript for use with KaibanJS. But if you want to learn more about TypeScript itself, there are many resources available to help you.

We recommend the following resources:

- [TypeScript Handbook](https://www.typescriptlang.org/docs/handbook/intro.html) - The TypeScript handbook is the official documentation for TypeScript, and covers most key language features.

- [TypeScript Discord](https://discord.com/invite/typescript) - The TypeScript Community Discord is a great place to ask questions and get help with TypeScript issues.

### ./src/how-to/06-API Key Management.md

//--------------------------------------------

// File: ./src/how-to/06-API Key Management.md

//--------------------------------------------

---

title: API Key Management

description: Learn about the pros and cons of using API keys in browser-based applications with KaibanJS. Understand when it's acceptable, the potential risks, and best practices for securing your keys in production environments.

---

# API Key Management

Managing API keys securely is one of the most important challenges when building applications that interact with third-party services, like Large Language Models (LLMs). With KaibanJS, we offer a flexible approach to handle API keys based on your project's stage and security needs, while providing a proxy boilerplate to ensure best practices.

This guide will cover:

1. **The pros and cons of using API keys in the browser**.

2. **Legitimate use cases** for browser-based API key usage.

3. **Secure production environments** using our **Kaiban LLM Proxy**.

:::tip[Using AI Development Tools?]

Our documentation is available in an LLM-friendly format at [docs.kaibanjs.com/llms-full.txt](https://docs.kaibanjs.com/llms-full.txt). Feed this URL directly into your AI IDE or coding assistant for enhanced development support!

:::

---

## API Key Handling with KaibanJS

When working with third-party APIs like OpenAI, Anthropic, and Google Gemini, you need to authenticate using API keys. KaibanJS supports two approaches to handle API keys:

### 1. Developer-Owned Keys (DOK)

This is the "move fast and break things" mode, where developers can provide API keys directly in the browser. This approach is recommended for:

- Rapid prototyping

- Local development

- Quick demos or hackathons

- Personal projects with limited risk

**Benefits**:

- **Fast setup**: No need to set up a server or proxy, allowing for quick iteration.

- **Direct interaction**: Makes testing and development easier, with the ability to communicate directly with the API.

**Drawbacks**:

- **Security risks**: Exposes API keys to the browser, allowing them to be easily viewed and potentially abused.

- **Limited to development environments**: Not recommended for production use.

### 2. Proxy Setup for Production

When building production-grade applications, exposing API keys in the frontend is a significant security risk. KaibanJS recommends using a backend proxy to handle API requests securely.

The **Kaiban LLM Proxy** offers a pre-built solution to ensure your API keys are hidden while still allowing secure, efficient communication with the LLM providers.

**Benefits**:

- **API keys are protected**: They remain on the server and never reach the frontend.

- **Vendor compliance**: Some LLM providers restrict frontend API access, requiring server-side communication.

- **Improved security**: You can add rate-limiting, request logging, and other security features to the proxy.

---

## What are API Keys?

API keys are unique identifiers provided by third-party services that allow access to their APIs. They authenticate your requests and often have usage quotas. In a production environment, these keys must be protected to prevent unauthorized use and abuse.

---

## Is It Safe to Use API Keys in the Browser?

Using API keys directly in the browser is convenient for development but risky in production. Browser-exposed keys can be easily viewed in developer tools, potentially leading to abuse or unauthorized access.

### Pros of Using API Keys in the Browser

1. **Ease of Setup**: Ideal for rapid prototyping, where speed is a priority.

2. **Direct Communication**: Useful when you want to quickly test API interactions without setting up backend infrastructure.

3. **Developer Flexibility**: Provides a way for users to supply their own API keys in scenarios like BYOAK (Bring Your Own API Key).

### Cons of Using API Keys in the Browser

1. **Security Risks**: Keys exposed in the browser can be easily stolen or misused.

2. **Provider Restrictions**: Some LLM providers, such as OpenAI and Anthropic, may restrict API key usage to backend-only.

3. **Lack of Control**: Without a backend, it's harder to manage rate-limiting, request logging, or prevent abuse.

---

## Legitimate Use Cases for Browser-Based API Keys

There are scenarios where using API keys in the browser is acceptable:

1. **Internal Tools or Demos**: Trusted internal environments or demos, where the risk of key exposure is low.

2. **BYOAK (Bring Your Own API Key)**: If users are supplying their own keys, it may be acceptable to use them in the browser, as they control their own credentials.

3. **Personal Projects**: For small-scale or personal applications where security risks are minimal.

4. **Non-Critical APIs**: For APIs with low security risks or restricted access, where exposing keys is less of a concern.

---

## Secure Production Setup: The Kaiban LLM Proxy

The **Kaiban LLM Proxy** is an open-source utility designed to serve as a **starting point** for securely handling API requests to multiple Large Language Model (LLM) providers. While Kaiban LLM Proxy provides a quick and easy solution, you are free to build or use your own proxies using your preferred technologies, such as **AWS Lambda**, **Google Cloud Functions**, or **other serverless solutions**.

- **Repository URL**: [Kaiban LLM Proxy GitHub Repo](https://github.com/kaiban-ai/kaiban-llm-proxy)

This proxy example is intended to demonstrate a simple and secure way to manage API keys, but it is not the only solution. You can clone the repository to get started or adapt the principles outlined here to build a proxy using your chosen stack or infrastructure.

### Cloning the Proxy

To explore or modify the Kaiban LLM Proxy, you can clone the repository:

```bash

git clone https://github.com/kaiban-ai/kaiban-llm-proxy.git

cd kaiban-llm-proxy

npm install

npm run dev

```

The proxy is flexible and can be deployed or adapted to other environments. You can create your own proxy in your preferred technology stack, providing full control over security, scalability, and performance.

---

## Best Practices for API Key Security

1. **Use Environment Variables**: Always store API keys in environment variables, never hardcode them in your codebase.

2. **Set Up a Proxy**: Use the Kaiban LLM Proxy for production environments to ensure API keys are never exposed.

3. **Monitor API Usage**: Implement logging and monitoring to track usage patterns and detect any abnormal activity.

4. **Use Rate Limiting**: Apply rate limiting on your proxy to prevent abuse or overuse of API resources.

---

## Conclusion

KaibanJS offers the flexibility to use API keys in the browser during development while providing a secure path to production with the **Kaiban LLM Proxy**. For rapid development, the **DOK approach** allows you to move quickly, while the **proxy solution** ensures robust security for production environments.

By leveraging KaibanJS and the proxy boilerplate, you can balance speed and security throughout the development lifecycle. Whether you’re building a quick demo or a production-grade AI application, we’ve got you covered.

### ./src/how-to/07-Deployment Options.md

//--------------------------------------------

// File: ./src/how-to/07-Deployment Options.md

//--------------------------------------------

---

title: Deploying Your Kaiban Board

description: Learn how to deploy your Kaiban Board, a Vite-based single-page application, to various hosting platforms.

---

# Deploying Your Kaiban Board

Want to get your board online quickly? From your project's root directory, run:

```bash

npm run kaiban:deploy

```

This command will automatically build and deploy your board to Vercel's global edge network. You'll receive a unique URL for your deployment, and you can configure a custom domain later if needed.

:::tip[Using AI Development Tools?]